User Metrics – Important Ranking Factors in 2016

Google is getting better at determining which sites are propped up solely by link schemes. Certainly, in 2016, Google will still be counting links as a primary method of ranking your site. However, a larger emphasis will be placed on determining whether your links are “legitimate”. Your links will be measured by several user metric factors to determine whether you’re gaining links based on interest in your site, or whether you’re simply buying link packages to manipulate your position in the SERPs.

User Metrics

Google’s ability to detect whether your link profile is “natural” has improved greatly in the last few years, and will only continue to grow in 2016. A link profile that is out of balance with your user metrics will trigger Google’s algorithms, which will filter your site from the SERPs. User signals will either legitimize your link portfolio or expose it as manipulative.

Branded Searches

Are people searching for your brand? One would assume that if you have thousands upon thousands of links to your site, you are a brand and people are actually searching for you. In the SEO world, for instance, consider these brands: Moz, SEOBook and Search Engine Land. Whenever I’m researching something and I want to know what Rand Fishkin says about a particular topic, I add “Moz” to my query. If I want to recall something Aaron Wall said, I’ll often stick “SEOBook” in the query. And if I’m interested in what Danny Sullivan has to say, yes, I’ll actually type out the entire “Search Engine Land” in the query.

These three sites have a tremendous number of links, but they have brand searches to back them up. People not only link to these sites using the brand keyword, they find the sites using the brand keyword. Again, I ask: are people searching for your brand? For a given share of branded incoming links, a corresponding share of branded searches should exist. If people are linking, then people should be searching. I can’t imagine a scenario that would cause an avalanche of links to your site without also causing a comparable number of searches for your site.

Traffic Sources

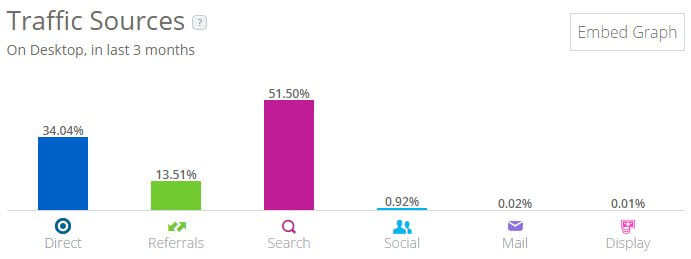

Is your site a popular one? Or are you depending entirely on Google to make it popular? The problem with depending on Google to make it popular is that you need links to succeed. If Google is your only source of traffic, how is it that so many people are linking to you? The first question is this: can Google determine where your traffic comes from? And if it can, how does that mesh with your linking patterns, if your only traffic originates from Google?

I believe that Google knows if you’re a high-traffic site, and if you don’t have high traffic (but do have a large number of links), that looks unnatural and your site would be filtered from the SERPs. Before we discuss the potential penalties, let’s examine whether Google could actually know if you have a certain amount of traffic.

Google owns the Chrome browser. As of today, Chrome has a 27.66% market share. That’s a huge sample, if they were to tap into it. Alexa.com publishes traffic estimates for a huge number of sites, and Google has over half a million pages from Alexa in its search results, which would indicate that Google has access to traffic signals from Alexa. Compete.com, an Alexa competitor, also shows traffic data. It wouldn’t be hard to imagine Google piecing together a site’s traffic from a dozen or more sources.

So then, if you’re receiving thousands, or even tens of thousands of links to your site, why do you have no traffic? If your only traffic source is Google, then your thousands of links are disqualified. A legitimate site would normally receive hundreds or thousands of visitors for every one blog post that has a link to the site. When the opposite is true, that there are hundreds of links for every one visitor, Google can determine that the link profile is unnatural.
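To make the ratio concrete, here is a minimal Python sketch of the comparison described above. It is purely illustrative: nobody outside Google knows what it actually computes, and the function names and the `min_visitors_per_link` threshold are hypothetical values chosen for the example.

```python
def visitors_per_link(non_google_visitors: int, inbound_links: int) -> float:
    """Return how many non-Google visitors a site gets per inbound link."""
    if inbound_links == 0:
        # No links at all: there is nothing to look unnatural.
        return float("inf")
    return non_google_visitors / inbound_links


def looks_unnatural(non_google_visitors: int, inbound_links: int,
                    min_visitors_per_link: float = 1.0) -> bool:
    """Flag a profile with far more links than visitors.

    The threshold of 1.0 is an assumption for illustration only.
    """
    ratio = visitors_per_link(non_google_visitors, inbound_links)
    return ratio < min_visitors_per_link


# Hundreds of links for every visitor: flagged.
print(looks_unnatural(non_google_visitors=50, inbound_links=10_000))
# Hundreds of visitors for every link: not flagged.
print(looks_unnatural(non_google_visitors=200_000, inbound_links=300))
```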

Time Spent on Site

Does Google know how much time you spend on a particular website? Not every site has Google Analytics installed. How else might they determine how much time someone has spent on a site? They could, for instance, count the time between people’s searches. People looking for information regularly return to Google to continue their search after they have visited your site. By measuring the difference between the time Google tracked someone clicking through to your site and the time they returned to Google to continue their search, Google can determine the amount of time spent on your site.
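The arithmetic behind this idea is simple enough to sketch in Python. This is an assumption-laden illustration, not a description of Google’s systems: the function names are hypothetical, and each visit is modeled as a pair of timestamps (the click on the search result, and the return to the search page).

```python
from datetime import datetime


def dwell_seconds(clicked_at: datetime, returned_at: datetime) -> float:
    """Seconds between clicking a result and returning to the search page."""
    if returned_at < clicked_at:
        raise ValueError("return time precedes click time")
    return (returned_at - clicked_at).total_seconds()


def average_dwell(visits: list[tuple[datetime, datetime]]) -> float:
    """Average dwell time over (clicked_at, returned_at) pairs."""
    if not visits:
        raise ValueError("no visits to average")
    durations = [dwell_seconds(clicked, returned) for clicked, returned in visits]
    return sum(durations) / len(durations)


visits = [
    (datetime(2016, 1, 1, 12, 0, 0), datetime(2016, 1, 1, 12, 0, 10)),  # 10 seconds
    (datetime(2016, 1, 1, 12, 5, 0), datetime(2016, 1, 1, 12, 7, 0)),   # 2 minutes
]
print(average_dwell(visits))
```

A visitor who never returns to the search page produces no second timestamp, so a measure like this only covers the visits that end back on Google.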

Another source is Google’s Chrome browser. Again, 27.66% of market share is a huge sample. It’s not hard to imagine that Google uses this data. I recall an instance where Matt Cutts was crying about Microsoft recording clicks on Google to learn people’s user metrics. A little background on that situation: Internet Explorer gathered data on how users were interacting with Google’s site, and Microsoft then used what people clicked on in its own ranking results. If Microsoft was using data from IE like this, what prevents Google from using Chrome to do the same?

In summary, Microsoft used “clickstream data” from IE to determine how people interact with Google, and Google cried foul. When it comes to time spent on site, this is a user metric that Google owns through Chrome. Why wouldn’t they use it? And if they actually do use it, what does it say about your site if you have an A+ link portfolio that suggests a super popular website, but nobody is spending time on your pages or even visiting your site, except through Google?

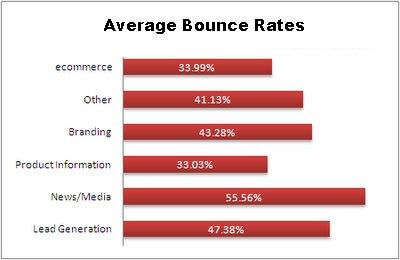

Bounce Rate

Google doesn’t even need Chrome browser data to determine if you have a high bounce rate. They also don’t need Analytics. They can get it simply from how quickly someone returns to Google with the same search. For instance, if someone Googles “Best Lawnmowers” and visits your site, and 10 seconds later they’re back on Google, continuing their search and looking at other pages, they obviously didn’t find what they were looking for on your site!

Compare this with a case where someone visits your page, and 2 minutes later they’re back on Google. This would indicate that they spent a good deal of time on your page, and found the information interesting enough to read it before they returned to Google. In this situation, the quality of your content would be judged to be higher.

Of course, you can’t pick up this sort of thing with a few searches, but (in 2012) Google was receiving around 3.3 billion searches per day. With such a huge sampling of data, Google is able to determine average user activity — especially when it comes to bounce rate. It’s likely that each niche has its own average bounce rates. If you’ve received 100 visits, and your bounce rate is outside of the average for your particular niche, then obviously Google can determine there’s something wrong with your site.
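As a rough illustration of that comparison, the Python sketch below treats any visit that returns to the search page within a cutoff as a bounce, then checks the site’s rate against a niche average. Everything here is a hypothetical assumption made for the example: the 10-second cutoff borrows the lawnmower scenario above, and the 0.15 tolerance and the niche averages in the usage lines are invented.

```python
def bounce_rate(dwell_times: list[float], bounce_cutoff: float = 10.0) -> float:
    """Share of visits that returned to the search page within the cutoff (seconds)."""
    if not dwell_times:
        raise ValueError("no visits recorded")
    bounces = sum(1 for seconds in dwell_times if seconds <= bounce_cutoff)
    return bounces / len(dwell_times)


def exceeds_niche_average(site_rate: float, niche_average: float,
                          tolerance: float = 0.15) -> bool:
    """True when the site's bounce rate is above the niche average by more
    than the tolerance. Both numbers are fractions between 0 and 1."""
    return site_rate - niche_average > tolerance


rate = bounce_rate([5, 8, 120, 300])  # two of four visits bounced
print(rate)
print(exceeds_niche_average(rate, niche_average=0.30))
```

With only a handful of visits the rate is too noisy to mean much, which is why the volume of searches matters in the argument above.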

Click-Through Anomalies

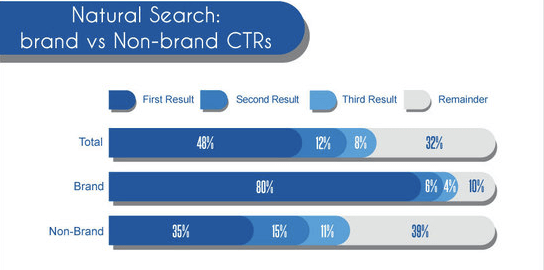

How often people click on your listing is another indication of the credibility of your site. Google knows that a #1 listing should be receiving around a 33% click-through average. If you rank #1 but the #2 listing is getting a 40% click-through, there’s something fishy with your ranking, and Google will adjust you down. Perhaps you’re trying to rank for someone else’s brand, and while you have the links to overtake that brand, real people aren’t falling for it. They recognize the site they actually want to visit, and it’s not yours.

Perhaps someone ended up ranking with gibberish spun content, and it shows in the snippet. Nobody is going to click that listing. As such, Google can determine there’s something wrong with it. A long, untrustworthy domain name would also receive a lower click-through ratio.
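A click-through anomaly check of this kind could be sketched as follows. Treat it as a thought experiment rather than a description of Google’s algorithm: only the 33% figure for position 1 comes from this article, while the expected values for positions 2 and 3, the function names, and the `max_relative_gap` threshold are hypothetical placeholders.

```python
# Expected click-through rate by ranking position.
# Only the value for position 1 comes from the article; the rest are made up.
EXPECTED_CTR = {1: 0.33, 2: 0.15, 3: 0.10}


def observed_ctr(clicks: int, impressions: int) -> float:
    """Clicks divided by impressions for one listing."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return clicks / impressions


def is_ctr_anomalous(position: int, clicks: int, impressions: int,
                     max_relative_gap: float = 0.5) -> bool:
    """Flag a listing whose CTR is far from what its position would predict."""
    expected = EXPECTED_CTR[position]
    gap = abs(observed_ctr(clicks, impressions) - expected) / expected
    return gap > max_relative_gap


# A #1 listing that only draws 10% of clicks looks suspicious.
print(is_ctr_anomalous(position=1, clicks=100, impressions=1000))
# A #2 listing drawing 40% of clicks is also far from its expected rate.
print(is_ctr_anomalous(position=2, clicks=400, impressions=1000))
```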

It might also be a case of nobody even recognizing your brand. If people see trusted brands at the top of the rankings, but your domain isn’t properly branded — they might avoid your site. By working hard to make your SERP presence look flawless, interesting, even click-baitish — you can influence this factor in your favor.
