Google Patent Defines Low Quality Sites – Is Yours Included? – Part 2 of 2

There are profound implications of Google’s patent that I haven’t yet discussed. The previous page specifies what a low quality website is, when averaged out between other sites in the same niche.

Other parts of the patent completely throw out links your page has received! The implications of the following section of the patent will affect many, many internet marketers:

To determine the link quality score, the link engine can perform diversity filtering on the resources. Diversity filtering is a process for discarding resources that provide essentially redundant information to the link quality engine.

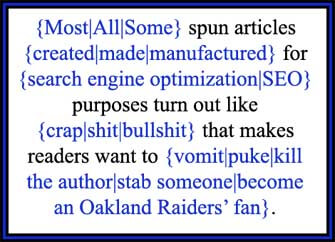

Diversity Filtering. What is it? If two documents are pointing to a page, and both pages are essentially the same information, cover the same points, and (though the wording may be different), are essentially the same document, Google will throw the links out. Whoa. This is Google’s silver bullet to spun content. Content spinning involves taking an article, providing a bunch of alternative synonyms to words, and spitting out text that is different, but essentially says the same thing. Google’s patent here invokes a diversity filter, or in other words “Yeah yeah, I heard this before. Link not counted.”

Diversity Filtering. What is it? If two documents are pointing to a page, and both pages are essentially the same information, cover the same points, and (though the wording may be different), are essentially the same document, Google will throw the links out. Whoa. This is Google’s silver bullet to spun content. Content spinning involves taking an article, providing a bunch of alternative synonyms to words, and spitting out text that is different, but essentially says the same thing. Google’s patent here invokes a diversity filter, or in other words “Yeah yeah, I heard this before. Link not counted.”

We need to keep in mind that Google takes into account synonyms, because it needs to return results based on searched synonyms. The question is this: Can Google, with all of it’s engineers and billions of dollars profit, come up with a system to identify two documents that say the same thing, with different synonyms? I’m going to say the answer to this is a resounding “Yes!” If you’re spinning content, and not making them extremely diverse, from here on out it’s a major waste of time.

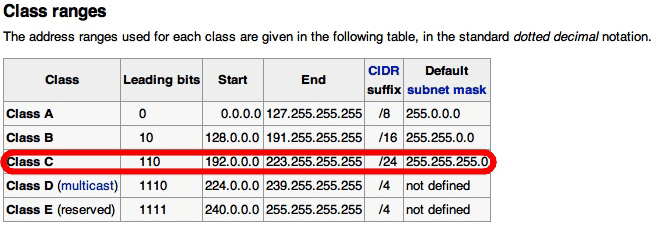

Google has a number of methods to double check whether you’re building links to yourself. The following Google patent attempts to bust people who use spun content, or links from the same IP address.

For example, the system can determine that a group of candidate resources all belong to a same different site, e.g., by determining that the group of candidate resources are associated with the same domain name or the same Internet Protocol (IP) address, or that each of the candidate resources in the group links to a minimum number of the same sites. In another example, the system can determine that a group of candidate resources share a same content context. A content context is a characterization of the content of a resource, e.g., a topic discussed in the resource or a machine classification of the resource.

Here we have Google checking out your IP address, and throwing away all the links you have from that resource to a particular page except for one. Furthermore, if all the pages are the same “content context”, for instance — rewritten articles, articles that are spun, articles that are extremely similar to each other, they’re discarded even if they’re not on the same IP address. Keep in mind that if Google is actively searching for this stuff to discount the links to your content, it can use the same data to ban your blog network, if it deems you’re trying to manipulate the search engine results.

Bad pages on your website will lower your overall link quality score. Google mentions three categories, vital, good and bad. Following is how it analyzes pages on your site to come up with an overall site score:

The system determines a link quality score for the site using the number of resources in the resource quality groups. In some implementations, the system adds the number of resources in a first resource quality group to the number of resources in a second resource quality group to determine a sum. The system then divides the sum by the total number of resources to determine the link quality score.

Remember that “resources” are pages on your website, and “resource quality groups” are how Google sorts your pages into “awesome, ok, and horrible”. Google adds the first group to the second group, (lets assume ok pages with horrible pages), and reaches a total by adding them together. Google will then divide the sum by the total pages you have on the topic, and the result is your link quality score.

In other words, lets say you have a website on any particular niche. A good page may be worth 10 points. A so-so page may be worth 3 points. You have 5 good pages, and 5 bad pages. 5 * 10 = 50 for your good pages. 5 * 3 = 15 for your bad pages. 50 + 15 = 65 total. 65 divided by 10 pages = 6.5. Your overall website therefore ranks 6.5 for your “link quality score”, if I read this correctly. Of course, the points are arbitrary — but they will be consistent between all sites.

What is the take-away from this? Low quality pages on your site hurt your rankings. Whatever the real weightings are for low quality vs high quality pages, the truth is they’re getting averaged out. It doesn’t matter if you have one awesome article for your niche, if you have 10 extremely low quality pages, they will average out to make for a low quality site. As such, your awesome page, even if it has tons of links, will be filtered from the SERPs, because your site is low quality on average.

What is the take-away from this? Low quality pages on your site hurt your rankings. Whatever the real weightings are for low quality vs high quality pages, the truth is they’re getting averaged out. It doesn’t matter if you have one awesome article for your niche, if you have 10 extremely low quality pages, they will average out to make for a low quality site. As such, your awesome page, even if it has tons of links, will be filtered from the SERPs, because your site is low quality on average.

Therefore, you’ve got two different things that are getting your site removed from search engines. The first, that your site is a low quality site, the second is that your links are from low quality sites. Following is another quote from the patent:

The system decreases ranking scores of candidate search results identifying sites classified as low quality sites. The system can decrease the ranking score of a low quality site by multiplying the ranking score by an amount based on the link quality score for the site.

Another section of the patent targets boilerplate links:

The system optionally discards candidate resources linking to the site only from a boilerplate section. For example, the system can discard a web page linking to the site only from a navigation bar of the web page.

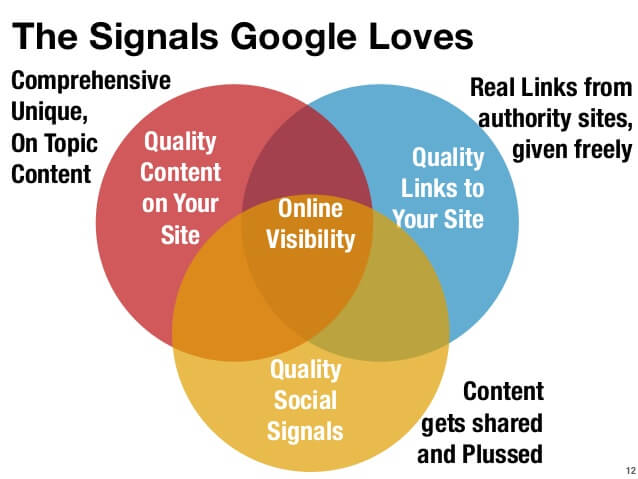

All-in-all, I’m not shocked by what this patent says. These are all things I found out the hard way. To see these things put in writing only confirms that everything mentioned above isn’t theoretical only, it is all inside Google’s algorithms right now. Following is a list of things learned from this patent.

All-in-all, I’m not shocked by what this patent says. These are all things I found out the hard way. To see these things put in writing only confirms that everything mentioned above isn’t theoretical only, it is all inside Google’s algorithms right now. Following is a list of things learned from this patent.

- The quality of your site is determined by averaging it’s quality when compared to other sites in your niche.

- Sites on the bottom end of the average are filtered from Google’s SERP results.

- Google has measures in place to combat spun or rewritten content, a “diversity filter”.

- Links from similar content are discarded. Note they may give an initial boost and then you lose rank when Google figures it out.

- Links from websites with the same IP, or same content, are discarded (and optionally penalized).

- Bad articles on your site reduce your overall site quality, negating good content.

- Navigational or boiler-type links to external websites are a waste.

For those of us who are finding spun content and low quality content a little less effective, now you know why. We should all audit our websites to remove low quality content, or to improve it to make it better. Take heed as you develop your promotional strategies and shoot for highly diverse links from diverse content.

Comments