Google Patent Defines Low Quality Sites – Is Yours Included? – Part 1 of 2

First, I want to thank SEO By The Sea for a great article on this Google patent that defines low quality sites. I’d like to expand on that article a bit, because some of the patent’s more shocking implications weren’t fully discussed.

It’s hard to read a Google patent. Check out this mumbo jumbo:

In general, one aspect of the subject matter described in this specification can be embodied in methods that include the actions of receiving a resource quality score for each of a plurality of resources linking to a site; assigning each of the resources to one of a plurality of resource quality groups, each resource quality group being associated with a range of resource quality scores, each resource being assigned to the resource quality group associated with the range encompassing the resource quality score for the resource; counting the number of resources in each resource quality group; determining a link quality score for the site using the number of resources in each resource quality group; and determining that the link quality score is below a threshold link quality score and classifying the site as a low quality site because the link quality score is below the threshold link quality score.
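Read literally, the claim describes a small bucketing procedure: score every resource that links to a site, drop each score into a range (a “resource quality group”), count how many links land in each group, turn those counts into one link quality score, and compare it to a threshold. The Python sketch below follows that wording step by step. Everything numeric in it is an assumption, because the claim does not say what the group ranges are, how the counts are combined, or where the threshold sits. `GROUP_UPPER_BOUNDS`, `GROUP_WEIGHTS`, the weighted-average formula and the 0.4 threshold are hypothetical placeholders, not Google’s values.

```python
from bisect import bisect_right

# Hypothetical upper bounds of the score ranges for each resource quality group.
# Groups: [0, 0.25), [0.25, 0.5), [0.5, 0.75), [0.75, 1]
GROUP_UPPER_BOUNDS = [0.25, 0.5, 0.75]

# Hypothetical weight per group, used to turn group counts into a single score.
GROUP_WEIGHTS = [0.0, 0.3, 0.7, 1.0]


def assign_group(resource_score: float) -> int:
    """Return the index of the group whose range contains the score."""
    return bisect_right(GROUP_UPPER_BOUNDS, resource_score)


def count_groups(resource_scores: list[float]) -> list[int]:
    """Count how many linking resources fall into each group."""
    counts = [0] * len(GROUP_WEIGHTS)
    for score in resource_scores:
        counts[assign_group(score)] += 1
    return counts


def link_quality_score(counts: list[int]) -> float:
    """Average of the group weights, weighted by how many links are in each group."""
    total = sum(counts)
    if total == 0:
        return 0.0
    return sum(w * c for w, c in zip(GROUP_WEIGHTS, counts)) / total


def is_low_quality(resource_scores: list[float], threshold: float = 0.4) -> bool:
    """Classify the site as low quality when its link quality score is below the threshold."""
    return link_quality_score(count_groups(resource_scores)) < threshold


if __name__ == "__main__":
    linking_scores = [0.1, 0.2, 0.3, 0.9]  # invented scores for four linking pages
    print(count_groups(linking_scores))    # [2, 1, 0, 1]
    print(is_low_quality(linking_scores))  # True
```

Under these made-up weights, one strong link cannot offset a majority of weak ones, which is the general shape of the claim: the verdict depends on the distribution of link quality, not on any single link.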

As best I can, I’m going to explain what I think is going on here. Thankfully Bill Slawski, the go-to guy for explaining Google patents, commented on the implications of this patent, but he didn’t go as in-depth as I’m about to.

Things that make you a low quality site

Below, I comment on each clause of the summary above. The original wording is in italics, followed by my clarification.

- *In general, one aspect of the subject matter described in this specification can be embodied in methods that include the actions of receiving a resource quality score for each of a plurality of resources linking to a site;* Clarified: Google is going to look at all the sites that link to you and assign each one a quality score.

- *assigning each of the resources to one of a plurality of resource quality groups,* Clarified: from how “resource quality groups” are used throughout the document, I can only conclude that a resource quality group is Google’s terminology for “niche”. Google is assigning the links to your site to particular niches.

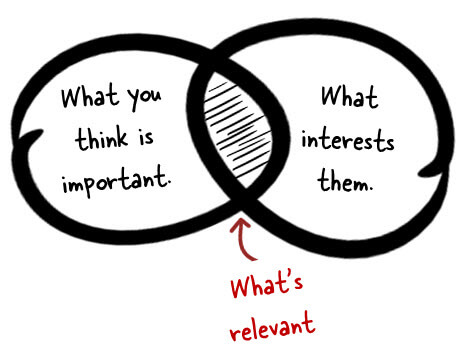

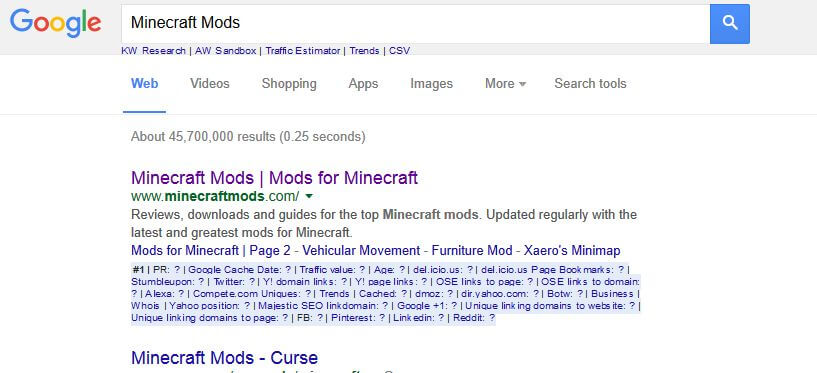

- *each resource quality group being associated with a range of resource quality scores,* Clarified: Every niche has its own range for what counts as a quality page, so a low quality website about current car models is defined completely differently from a low quality website about Minecraft mods. “Low quality” means something different in every niche.

- *each resource being assigned to the resource quality group associated with the range encompassing the resource quality score for the resource;* Clarified: This says the same thing again: every link you receive is assigned to a niche group, and every niche group has its own threshold for what counts as a link from a quality source, based on the average for that niche.

- *counting the number of resources in each resource quality group;* Clarified: Every niche has a different number of websites associated with it. The volume of sites in a niche is part of the equation.

- *and determining that the link quality score is below a threshold link quality score and classifying the site as a low quality site because the link quality score is below the threshold link quality score* Clarified: if your website falls below the threshold when compared to other sites in the same niche (for example, below the median), it is classified as a low quality website.

There is more to this patent, but before I discuss its other parts, let’s talk about the ramifications of what we have read so far and what they can mean for your day-to-day SEO activities.

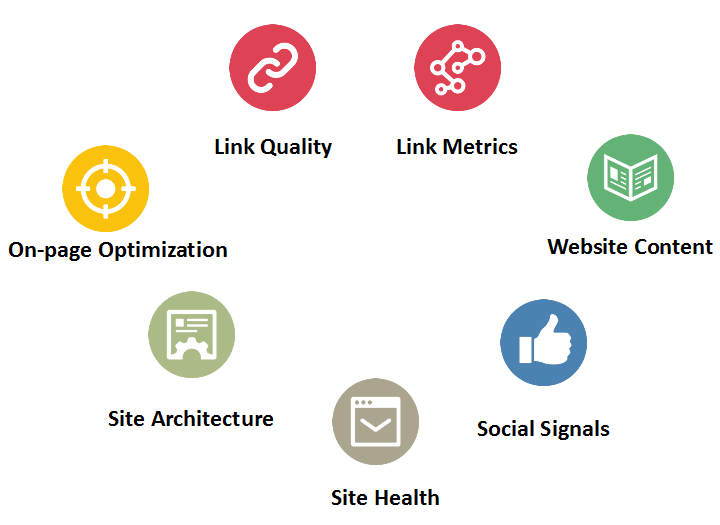

Here is this first portion of the patent in a nutshell: Google is weeding out low quality sites from search results. What makes a site low quality? If your site falls in the bottom portion of all the websites in your particular niche, you won’t rank well for anything. The idea is that Google determines the quality factors of a typical website in many ways. There is Author Rank, where Google attributes authority to an individual in a particular space. There are social signals. There are backlinks. There is a certain level of content optimization, and an average page length for articles in that niche, etc. Take all of that and average it out for each and every niche. Google then has a cut-off line (maybe it’s 50%, maybe it’s 70%), and if you’re not above it (for instance, in the top 30% of sites for a particular niche), you’re not even going to rank, period.
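The niche cut-off idea can be sketched as a simple percentile filter. This is a hypothetical illustration of the reading above, not code from the patent or from Google. It assumes each site has already been boiled down to a single quality score between 0 and 1, uses the “top 30%” example as its default, and the niche scores are invented.

```python
def niche_cutoff(niche_scores: list[float], keep_top_percent: int = 30) -> float:
    """Return the lowest score that still lands in the top `keep_top_percent` of the niche."""
    if not niche_scores:
        raise ValueError("a niche needs at least one scored site")
    ranked = sorted(niche_scores, reverse=True)
    # Integer ceiling of n * percent / 100, so at least one site always survives.
    kept = max(1, (len(ranked) * keep_top_percent + 99) // 100)
    return ranked[kept - 1]


def passes_filter(site_score: float, niche_scores: list[float], keep_top_percent: int = 30) -> bool:
    """A site passes when its score is at least the niche cut-off."""
    return site_score >= niche_cutoff(niche_scores, keep_top_percent)


# Invented niches: the same site score clears one and fails the other.
MEDICAL = [0.95, 0.90, 0.88, 0.85, 0.80, 0.78, 0.75, 0.70, 0.65, 0.60]
MINECRAFT_MODS = [0.60, 0.50, 0.45, 0.40, 0.35, 0.30, 0.25, 0.20, 0.15, 0.10]

if __name__ == "__main__":
    my_site = 0.55
    print(passes_filter(my_site, MEDICAL))         # False (cut-off is 0.88)
    print(passes_filter(my_site, MINECRAFT_MODS))  # True (cut-off is 0.45)
```

The same site is filtered in a competitive niche and survives in a weak one, because the bar is relative to the niche rather than absolute.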

Notice that this is a patent on low quality sites, NOT low quality pages. The averages described above apply to your entire website: what matters is whether your site, on average, makes it into the top portion of your niche. If it doesn’t, a penalty filter will remove your listings from Google’s SERPs. I think the gist is this: even if one of your pages has a tremendous number of backlinks, it won’t matter if your site as a whole is lousy compared to others in the same niche.
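A minimal sketch of the site-versus-page distinction, assuming (hypothetically) that the site score is a plain average of per-page scores and that the niche cut-off is already known. The page paths, scores and the 0.6 cut-off are invented for illustration.

```python
from statistics import mean


def site_score(page_scores: dict[str, float]) -> float:
    """Hypothetical site-level score: the plain average of every page's score."""
    return mean(page_scores.values())


def site_is_filtered(page_scores: dict[str, float], niche_cutoff: float) -> bool:
    """The whole site is filtered when its average falls below the niche cut-off."""
    return site_score(page_scores) < niche_cutoff


# Invented example: one heavily linked page, several thin ones.
PAGES = {
    "/great-guide": 0.95,
    "/thin-page-1": 0.20,
    "/thin-page-2": 0.25,
    "/thin-page-3": 0.20,
}

if __name__ == "__main__":
    cutoff = 0.6
    print(max(PAGES.values()) >= cutoff)    # True: the best page alone clears it
    print(site_is_filtered(PAGES, cutoff))  # True: the site average (about 0.4) does not
```

Under this reading, the strong page is dragged down with the rest of the site, because the filter looks at the site average rather than at individual pages.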

What is the take-away from this patent so far? I think it applies to people who bite off more than they can chew with their keywords. If you’re tackling the medical niche, for instance, consider the quality of the top-ranking websites in that niche. Then you say, aha! I have this 5-word long-tail keyword that nobody has optimized for! But it’s still a medical keyword, in the medical niche. Unless your site clears the threshold set by the other sites in the medical niche, it will be filtered as a low quality site, even if you bombard your page with links.

Take another keyword, such as Minecraft Mods, where the trust rank of the websites (on average) is much lower. Think of all the websites created by kids who enjoy playing the game, how few links their pages receive, and the sheer volume of people discussing the topic, and you can see how making it into the top half of Minecraft Mods websites is far easier. In a niche like this, where the average site is of much lower quality, you are far less likely to hit a penalty filter that removes you from the top 1000 search results.

This is part one of two articles I intend to write about this Low Quality Site patent from Google. The next article covers the other factors the patent discusses, so please keep reading to learn more.
